1. Introduction

Human speech is a natural mode of communication that enables the exchange of highly complex, detailed, or sophisticated information between two or more speakers. This mode of communication not only conveys information related to a message, but also information related to speaker’s gender, emotions, or even speaker identity [1]. For many years there has been great interest from scientific community in speech signals, not only from the perceptual point of view but also from acoustics given the number of applications [1-4]. One of such applications is automatic speaker recognition, in which we seek to design a digital system to play the role of a human listener at identifying people based on that person’s voice [2,5,6]. Applications with voice biometrics are burgeoning as a secure method of authentication, which eliminates the common use of personal identification numbers, passwords, and security questions.

In automatic speaker recognition, there are two classical tasks that can be performed: speaker identification (SI) and speaker verification (SV). Identification is the task of deciding, given a speech sample, who among a set of speakers said the sample. This is an N-Class problem (given N speakers), and the performance measure is usually the classification rate or accuracy. Verification, in turn, is the task of deciding, given a speech sample, whether the specified speaker really said the sample or not. The SV problem is a two-class problem of deciding if it is the same speaker or an impostor requesting verification. Commonly, SV exhibits greater practical applications related to SI, especially in access control and identity management applications [2,5,7]. In this study, we address the speaker verification problem by using articulatory information.

The general structure of a speaker recognition system can be explained in three functional blocks as follows: i) an input block to measure important features parameters from speech signals; ii) a feature selection block or dimensionality reduction, which is a stage that relies on a suitable change (simplification or enrichment) of a representation to enhance the class or cluster descriptions [8]; iii) and the generalization/inference stage. In this final stage, a classifier/identifier is trained. The training process involves the parameter tuning of models to describe training samples (i.e., features extracted from speech recordings) [5,8,9].

With automatic speaker recognition, short time-based features such as mel-frequency cepstral coefficients combined with Gaussian mixture models (GMM) or latent variable inspired approaches [10,11] have been, for many years, the typical techniques for text-independent speaker recognition. While different approaches have been proposed to add robustness to speaker recognition systems against environmental noise [12], environmental noise and channel variability are still challenging problems. Hence, it is important to explore other information sources to extract complementary information and to add robustness to the systems. In this regard, articulatory information is known to be less affected by noise and contains speaker identity information, so it is prone to be used in automatic speaker recognition tasks [13]. Furthermore, several studies have shown articulatory related information is an important source of variability between speakers [13-15]. Nevertheless, measuring articulatory information is a complex task, and there are alternative strategies that have been proposed to estimate articulatory movements using acoustic information [16-18]. As an example, in [19,20] authors propose to use the trajectories of articulators estimated from the acoustic signal as a method to improve performance in speaker verification systems.

By contrast, there are research works that use direct measurements from different sensor types. For instance, electromagnetic articulography (EMA) data have been used for speaker recognition purposes, but EMA data acquisition is costly. In [21], recordings from 31 speakers were used, 18 males and 13 females, achieving 98.75% accuracy for speaker identification tasks by using EMA data. EMA uses sensor coils placed on the tongue (typically in 2 or 3 points) and other parts of the mouth to measure their position and movement over time during speech, which allows to capture data with great time resolution but limited spatial resolution [22]. Furthermore, articulatory movements can also be measured by using real-time vocal tract magnetic resonance imaging (rtMRI), and results suggest that articulatory data from rtMRI can also be used for speaker recognition purposes [23,24].

As an alternative to the previously described acquisition methods, ultrasound equipment is considerably cheaper and more portable. Furthermore, it can capture in real-time the mid-sagittal plane of the tongue but in a noninvasive way, in contrast to EMA. In addition, ultrasound does not add interference while acquiring acoustic signals as in MRI, and neither does it affect natural movements of articulators, as in EMA and MRI. Hence, ultrasound is a technique of higher cost-benefit when compared with other data acquisition methods for measuring articulatory information [25]. In fact, phonetic research has employed 2D ultrasound for a number of years for investigating tongue movements during speech. Motivated by these advantages, and under the hypothesis that articulatory information can be useful for speaker recognition tasks [20], this work proposes to analyze the potential use of articulatory information associated with the tongue superior contour for speaker verification.

2. Materials and methods

2.1. Data acquisition

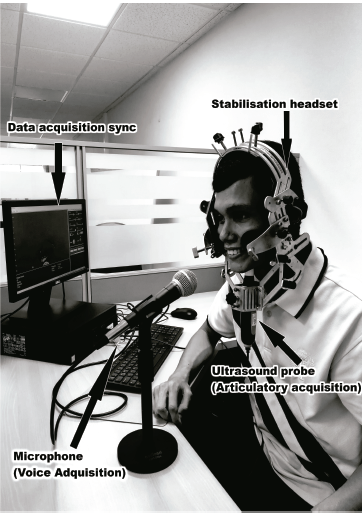

Our dataset contains recordings of 17 speakers (9 female and 8 male). The ages of participants range from 20 to 24 years, and participants are from the same geographical location, Bucaramanga in Colombia. All recordings contain information from the acoustic signal and the ultrasound video. For data acquisition we used the ultrasound probe PI 7.5 MHz Speech Language Pathology, (https://seemore.ca/portable-ultrasound-products/pi-7-5-mhz-speech-language-pathology-99-5544-can/) manufactured by Interson. To avoid artifacts, we used a helmet Articulate Instruments [26] to stabilize the probe [26,27], acoustic signals were recorded using the Shure SM58 microphone at 48000 Hz sampling rate, and the recordings were saved in WAV format. Fig. 1 shows the hardware used in present work. The ultrasound video was stored as a grey scale images sequence using the format JPG, with dimensions of 800x600 pixels. Each speaker uttered 30 Spanish sentences in a total of 340 seconds, and approximately 4000 images per speaker were recorded. For additional details, the interested reader can find information in [28].

Source: [28].

Figure 1 Equipment used for the parallel acoustic-articulatory data collection: microphone, personal computer, ultrasound probe and stabilization headset.

2.2. Articulatory features

The articulatory features were computed in two stages using the image sequence from the ultrasound video. First, the tongue contour is segmented using the software EdgeTrak [29], which uses an algorithm based on active contours [30]. Fig. 2 shows an example of the extracted contour from an ultrasound image. In several instances, it was necessary to apply a correction over the estimated contour.

Next, having N = 50 coordinates (x,y) describing the tongue contour for each frame, they are modeled. Having the tongue contour from an image, we propose to use two approaches:

Adjust directly a six-degree polynomial function using all N = 50 points, as shown in Fig. 3, and append to the 7 coefficients the horizontal coordinates from the initial x i and final x f points, resulting in a nine-dimensional feature vector αk = [p 0, p 1, …, p 6, x i, x f ] T describing the k-th - th ultrasound image, where p j denotes the j-th polynomial coefficient.

Take five equally spaced points with coordinates {𝑥𝑖,𝑦𝑖:𝑖=1,10,20,30,40,50} and arrange them into a ten dimensional feature vector [𝑥1,𝑥2,…,𝑥5,𝑦1,𝑦2,…,𝑦5]𝑇.

2.3. Acoustic features

The most popular analysis method for automatic speech recognition combines cepstral analysis theory [31] with aspects related to the human auditory system [2]. The so-called mel-frequency cepstral coefficients (MFCC) are the classical frame-based feature extraction method widely used in speech applications. Originally proposed for speech recognition, MFCC also became a standard for many speech-enabled applications, including speaker recognition [2, 32, 33]. In addition to the spectral information represented by the MFCCs, the temporal changes in adjacent frames play a significant role in human perception [34].

For MFCC computation, each speech recording is pre-emphasized and windowed in overlapped frames of length τ using a Hamming window to smooth the discontinuities at the edges of the segmented speech frame. Let x(n) represent a frame of speech that is pre-emphasized and Hamming-windowed. First, x(n) is converted to the frequency domain by an N point discrete Fourier transform (DFT). Next, P triangular bandpass filters spaced according to the mel scale are imposed on the spectrum. These filters do not filter time domain signals, they instead apply a weighted sum across the N frequency values, which allows to group the energy of frequency bands into P energy values. Finally, a discrete cosine transform (DCT) is applied to the logarithm filter bank energies resulting in the final MFCC coefficients. This process is repeated across all the speech frames, hence each speech recording is represented as a matrix of MFCC coefficients, being each arrow the respective coefficients of a single frame.

The temporal changes in adjacent frames play a significant role in human perception [34]. To capture this dynamic information in the speech, first- and second-order difference features (Δ and Δ Δ MFCC) can be appended to the static MFCC feature vector. In our experiments, 27 triangular bandpass filters spaced according to the mel scale were used in the computation of 13 MFCC features, and Δ and ΔΔ features were appended, resulting in a 39-dimensional feature vector. The interested reader is referred to [2, 33, 35] for more complete details regarding the computation and theoretical foundations of the MFCC feature extraction algorithm. The MFCC were computed on a per-window basis using a 25 ms hamming window with 10 ms overlap. We used the HTK toolkit to compute these features [36]. After dropping frames where no vocal activity was detected, cepstral mean and variance normalization was applied per recording to remove linear channel effects [37,38].

2.4. GMM Speaker modelling

For speaker modeling, the well-known Gaussian mixture model has remained in the scope of speaker recognition research for many years. The use of Gaussian mixture models for speaker recognition is motivated by these models’ capability to model arbitrary densities, and the individual components of a model are interpreted as broad acoustic classes [5]. A GMM is composed of a finite mixture of multivariate Gaussian components and the set of parameters denoted by λ. The model is characterized by a weighted linear combination of C unimodal Gaussian densities by the function:

Where 𝒪 is a D-dimensional observation or feature vector,α i is the mixing weight (prior probability) of the i-th Gaussian component, and N (.) is the D-variate Gaussian density function with mean vector µ i and covariance matrix Σ i

Let 𝒪={𝑜1,…,𝑜𝑘} be a training sample with K observations. Training a GMM consists of estimating the parameters

to fit the training sample 𝒪 while optimizing a cost function. The typical approach is to optimize the average log-likelihood (LL) of 𝒪 with respect to the model λ and is defined as [8]:

to fit the training sample 𝒪 while optimizing a cost function. The typical approach is to optimize the average log-likelihood (LL) of 𝒪 with respect to the model λ and is defined as [8]:

The higher the value of LL, the higher the indication that the training sample observations originate from the model λ. Although gradient-based techniques are feasible, the popular expectation-maximization (EM) algorithm is used for maximizing the likelihood with respect to given data. The interested reader is referred to [8] for more complete details. For speaker recognition applications, first, a speaker-independent world model or universal background model (UBM) is trained using several speech recordings gathered from a representative number of speakers. Regarding the training data for the UBM, selected speech recordings should reflect the expected alternative speech to be encountered during recognition. This applies to both the type and the quality of speech, as well as the composition of speakers. Next, the speaker models are derived by updating the parameters in the UBM using a form of Bayesian adaptation [39]. In this way, the model parameters are not estimated from scratch, with prior knowledge from the training data being used instead. It is possible to adapt all the parameters, or only some of them from the background model. For instance, adapting only the means has been found to work well for speaker recognition, as was shown in [39], according to the authors, there was no benefit in updating parameters such as covariance matrix or priors associated to each gaussian.

2.5. i-vector modeling approach

The i-vectors extraction technique was proposed in [10] to map a variable length frame-based representation of an input speech recording to a small-dimensional feature vector while retaining the most relevant speaker information. First, a C-Component GMM is trained as an universal background model (UBM) using the Expectation -- Maximization (EM) algorithm and the data available from all speakers from the train set or background data, as described in the previous section. Speaker and session-dependent supervectors of concatenated GMM means are modeled as:

where m is the speaker- and channel-independent supervector; T ∈ℝ CF×D is a rectangular matrix of low rank covering the important variability (total variability matrix) in the supervector space; C,F and D represent, respectively, the number of Gaussians in the UBM, the dimension of the acoustic feature vector and the dimension of the total variability space; and finally, 𝜙∈ℝ𝐷×1 is a random vector with density 𝒩(0,𝐼) and referred to as the identity vector or i-vector [12]. A typical i-vector extractor can be expressed as a function of the zero- and first-order statistics generated using the GMM-UBM model, and it estimates the Maximum a Posteriori (MAP) point estimate of the variable 𝜙. This procedure is complemented with some post-processing techniques such as linear discriminant analysis (LDA), whitening, and length normalization [40].

3. Experimental setup

During testing, in a verification scenario, we consider a testing sample 𝒪 t and a hypothesized speaker with model λ hyp and the task of the speaker verification system is to determine if 𝒪𝑡 matches the speaker model, when using a GMM+MAP adaptation based system. When using the i-vector/PLDA approach, first it is necessary to extract the i-vector 𝜙 test from the respective testing sample, 𝒪𝑡. Next, we consider 𝜙 test and a hypothesized speaker with a set of averaged i-vectors 𝜙 enrol which is the speaker model, and the task of the speaker verification system is to determine if 𝜙 test matches the speaker model. There are two possible hypotheses: 1) The testing sample is from the hypothesized speaker, and 2) the testing sample is not from the hypothesized speaker.

For the experiments herein, we use the probabilistic linear discriminant analysis (PLDA) approach for scoring, as recommended in [41]. The PLDA model splits the total data variability into within-individual (U) and between-individual (V) variabilities, both residing in small-dimensional subspaces. Originally introduced for face recognition, PLDA has become a standard in speaker recognition. The interested reader is referred to [42] for further details regarding PLDA formulation.

The decision can be made by computing the log-likelihood (score) between the two hypotheses; details can be found in [5,41,42]. For the experiments herein, the dimensionality of LDA transformation matrix V and U were fixed to the number of train speaker set and, M =0, respectively; where the LDA model is tuned accordingly per feature set.

In this work, we used all sessions from 15 speakers to train the UBM, as well as 24 recordings from two speakers for enrollment, and for testing, we used six recordings per client, see Table 1. Finally, the performance of the system was measured using the EER (Equal Error Rate), a common performance measure for speaker verification systems [7].

Sixteen Gaussian distributions form the GMM of the UBM model, whose parameters were adjusted by the EM (Expectation-Maximization) optimization algorithm. In order to evaluate the method, it is performed cross-validation in respect to speakers (15 for training, 2 for testing). For each cross-validation iteration, 30 phrases belonging to those 15 speakers are included for training the UBM model. In total, 105 experiments were performed, with a single UBM per experiment. m05, m06 speakers were excluded from the training set because they contain less than 30 sentences. For each experiment, 24 sentences belonging to the two remaining speakers were utilized in order to find the corresponding two individual models. The remaining 6 sentences per speaker are used to carry out the verification test. It is important to note that for each experiment, we have 10 different ways of selecting the sentences (24 for the individual models and 6 for testing). The final EER value can be estimated by averaging those 1050 EER values corresponding to the tests.

Herein, we implemented two approaches for speaker verification: the classical GMM-UBM+MAP adaptation approach and the most recent i-vector + PLDA paradigm; for this purpose, we used the MATLAB tool MSR Identity Toolbox [38]. Having acoustic feature vectors such as the MFCC, articulatory features from the polynomial modeling of the tongue contour, and the fusion of these features, we train a GMM as UBM for two purposes: i) to apply MAP adaptation and obtain a speaker model, and ii) to compute the zero- and first-order statistics (Baum welch statistics) and to train the i-vector extractor. The total variability matrix dimension is set to D = 16, and the i-vectors dimension was reduced to 14 using LDA.

4. Results

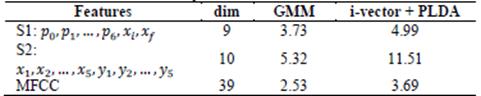

Table 2 presents the results using articulatory features, and these results are compared with the classical MFCC feature set. In the table, the feature set S1 refers to the polynomial approach, where the feature vector is composed of seven coefficients plus the initial and final horizontal coordinates of the tongue contour (𝑝0,𝑝1,…,𝑝6,𝑥𝑖,𝑥𝑓). The feature set S2 refers to the feature set, where we take five equally spaced points and arrange both horizontal and vertical coordinates in a single feature vector {𝑥𝑖,𝑦𝑖:𝑖=1,10,20,30,40,50}.

Table 2 EER (%) comparison of different feature sets using GMM+MAP adaptation and i-vectors + PLDA based systems.

Source: The Authors.

As can be seen, best results were obtained using the classical GMM/MFCC paradigm, which achieves an EER = 2.53%. Results achieved by articulatory features, on the other hand, are not as good as results presented by MFCC feature vectors, but these results show that articulatory features contain highly discriminative information associated to speaker identity. As can be seen, the EER = 3.73 achieved by the set S1 has and absolute difference of 1.2% relative to results achieved by MFCC features. It is important to clarify that in the case that S1 and S2 did not contain discriminative information, the verification results would not be comparable with those achieved with the MFCC, which have already been shown as discriminative characteristics in numerous works. These results are in line with previously reported research in [43], where the EER is around 4.7 - 6.8 % using information from the tip and middle of the tongue using an EMA device. It is also important to point out that the GMM+MAP adaptation based systems achieved better performance than the i-vector+PLDA approach. This can happen due to the lack of data for training the i-vector extractor [20], and there is not enough variability to benefit from this technique.

Features described in Sections 2.2 and 2.3 place emphasis on different aspects of the speech production process, thus likely contain complementary information. This hypothesis has motivated the exploration of fusion at different levels to combine the strengths of feature representations extracting complementary information [7]. One way to combine the strengths of these features is by combining them at the frame level by concatenating articulatory features to the MFCC feature vector. For these experiments, it is necessary to synchronize the feature sets to guarantee the temporal information to be aligned. This is done by modifying the frame size for computation of the MFCC to 78 ms.

Results from this fusion strategy are presented in Table 3. As can be seen, important improvements were attained when comparing with results presented in Table 2. In particular, there are some losses with respect to the GMM+MAP adaptation based system when combining MFCC with S1 or S2. However, the speaker verification system based on i-vectors + PLDA and using as feature vectors the fusion of MFCC + S1 achieves an EER = 2.11%, which is the lowest EER for all systems we are comparing. These results are also in line with previously reported research in [20] where fusion was used as a strategy to enhance performance in a speaker verification system. Finally, to complement results presented in Tables 2 and 3, Fig. 4 depicts the comparison of all systems using the DET curve [44]. As can be seen, the MFCC+S1 / GMM-UBM based system presents the best performance when compared to other approaches.

5. Conclusions

Herein, we have addressed the problem of speaker verification (SV) using articulatory information extracted from the tongue movements. According to our results, articulatory features proposed in this work contain highly discriminative information associated to speaker identity, which are useful for speaker verification purposes. It is also important to highlight that these features contain complementary information that can be used in a fusion scheme with typical acoustic features, such as MFCC, improving speaker verification performance. Features extracted from the tongue contour using a polynomial approach exhibit promising results, achieving an EER = 3.73%, which is higher to the EER achieved by MFCC alone (2.53%), but still comparable.

Even though articulatory information has been used previously for speaker recognition purposes, this work presents an approach that reduces interference and cost while acquiring the signal based on ultrasound images. We also show the potential of this approach to extract complementary information to typical acoustic features, and this is shown when fusing features in a single vector, which results in a better performance of the speaker verification system.

On the other hand, for the task of estimating articulatory information, it is possible to use speaker independent acoustic-to-articulatory inversion methods. However, these methods have been evaluated in a small amount of speakers for the task at hand. In this regard, ultrasound technology allows to measure articulatory information directly from more speakers and at a reduced cost, thus, obtaining better acoustic-to-articulatory models. These new models could be used as a complement for acoustic features. For future work, we propose to implement an speaker independent acoustic-to-articulatory inversion method for feature extraction to be used in speaker recognition systems.