1. INTRODUCTION

In recent decades, the scientific and educational communities have stressed the importance of incorporating Computational Thinking (CT) into curricula at all levels of education. However, because CT is an emerging field, there is still no consensus on its definition or practical application. Consequently, a variety of approaches have been adopted to integrate it into students’ learning processes. This lack of a standardized definition also makes it difficult to design methods and tools for assessing CT [1]. Moreover, the rapid advancement of information and communication technologies underscores the need for 21st-century individuals to develop digital skills [2], [3], as these technologies are changing how people think, act, communicate, and solve problems.

From a conceptual standpoint, various definitions of CT have been put forth. The definition in [4] serves as a starting point: CT is the process of applying basic computer science principles to solve problems, design systems, and understand human behavior. [5], for his part, analyzed the definitions of CT proposed from generic, operational, psychological-cognitive, and educational-curricular perspectives. In their literature review, the authors of [6] suggested classifying definitions according to two approaches. The first concerns the relationship between computational concepts and programming, with [7]-[9] as notable examples. The second concerns the set of competencies that students should develop, encompassing domain-specific knowledge and problem-solving skills. Within this latter approach, the authors highlight the proposals of the International Society for Technology in Education (ISTE) and the Computer Science Teachers Association (CSTA) [10], along with [11] and [12].

Several initiatives have been developed to integrate CT into curricula, along with tools for assessing it accurately and reliably. These tools include questionnaires [13]-[15], task-based tests [16], coding activities [17], and observation. Nevertheless, for CT assessment to be applied widely across educational levels, the construct must be measured with instruments whose psychometric properties have been established.

This systematic review was motivated by the need to measure CT and identify the instruments that have been designed for its assessment. Specifically, the goal is to analyze a set of bibliometric indicators and variables of interest, such as the type of instrument, number of items, target population, sample size, evidence of pilot testing, identification of skills/competencies, theoretical foundations, and psychometric properties.

1.1 Literature review

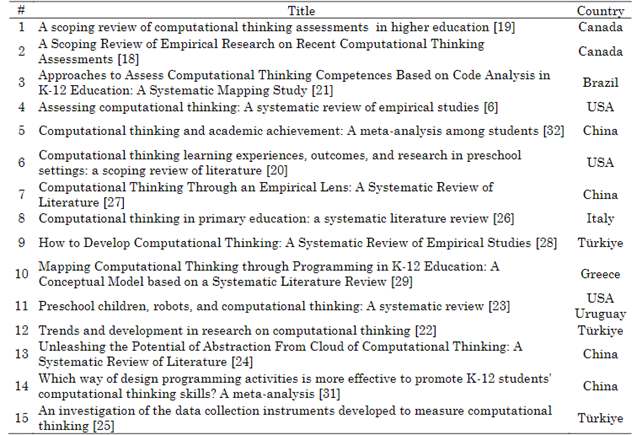

An examination of previous studies on the use of CT assessment tools provided valuable insights into the current landscape of this field. The search yielded 15 reviews, classified into different categories: scoping reviews [18]-[20], systematic or bibliometric mappings [21], [22], systematic reviews [6], [23]-[29], and meta-analyses [30], [31]. The analysis of these reviews underscored the need to identify the instruments employed for measuring CT, their psychometric properties, and the various variables associated with CT.

The retrieved reviews shed light on research related to CT assessment. For instance, a scoping review of CT assessments in higher education [19] identified empirical studies of CT assessment in post-secondary settings. Most of the instruments analyzed sought to measure CT skills by combining several dimensions, including concepts, practices, and perspectives. Among the skills most frequently evaluated were algorithmic thinking, problem-solving, data handling, logic, and abstraction. However, only four of these instruments provided sufficient evidence of their reliability and validity.

In another scoping review of empirical research on recent CT assessments [18], the authors classified assessment features related to graphical or block-based programming, web-based simulation, robotics-based games, tests, and scales. Most of the studies in this review adopted a quasi-experimental design, and only a few provided evidence of validity. The review highlighted the need for assessments that target different levels of higher-order thinking skills.

In their systematic review, the authors of [6] evaluated 96 articles, considering variables such as educational level, subject-matter domain, educational setting, and assessment tool. Their findings emphasized the need for more assessments targeting high school students, college students, and teachers, as well as for evidence of the validity and reliability of the instruments. The authors of [25], for their part, analyzed 64 studies on CT measurement, identifying the psychometric properties of instruments designed primarily to determine levels and measure skills.

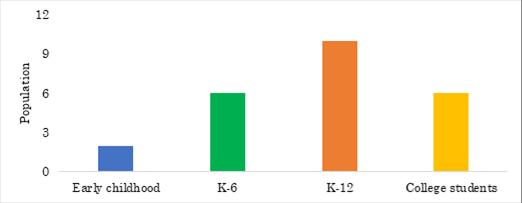

In the identified scoping reviews, mapping reviews, and meta-analyses, Scopus, ScienceDirect, ERIC, and Web of Science (WOS) were the most frequently consulted sources. As for the target population, Figure 1 shows that the most commonly selected population was students in K-12 educational settings. No reviews targeting teachers were found in the analysis.

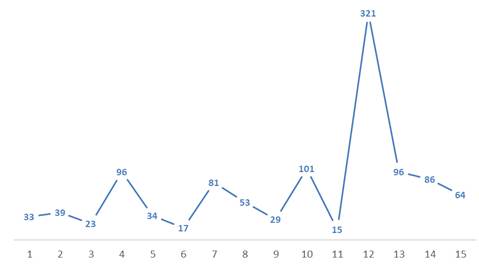

Out of the 15 reviews, the one conducted by [22] included the largest number of sample articles (321 in total), while [23] had the smallest sample (15 articles). The sample sizes of the other reviews ranged from 17 to 101 articles. Figure 2 illustrates the number of articles included in the 15 reviews.

Additionally, Table 1 lists the titles of the review articles, along with the country where the research was conducted.

2. METHODOLOGY

This systematic review followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement [33]. The process involved the following steps: (a) defining the research questions, objectives, and study variables (bibliometric indicators and variables of interest); (b) conducting a literature search, which comprised defining the search strings, period of analysis, inclusion and exclusion criteria, and sources of information, followed by study selection; and (c) identifying relevant articles.

2.1 Research questions, objectives, and variables

The following research questions were proposed for this systematic review:

Which studies have used instruments to assess CT?

What tools have been proposed for measuring CT?

What population is targeted for instrument application?

What constructs or skills are evaluated or measured?

What are the psychometric properties of the employed instruments?

What statistical methods were employed for analyzing psychometric properties?

What factors are considered when measuring CT?

All these questions serve to identify the tools that have been used for CT assessment, their psychometric properties, and the skills they evaluate. The proposed variables were divided into two categories: (i) bibliometric indicators, encompassing title, source of information, publication year, country, language, authors, journal, quartile, and the SCImago Journal Rank (SJR) and Journal Citation Reports (JCR) indices; and (ii) variables of interest, including type of instrument, number of items, age of target population, evaluated skills/competencies, theoretical foundations, authors, sample size, pilot testing, and method for determining instrument validity and reliability.

2.2 Literature search

To conduct the search, the following eight search strings were formulated, incorporating key concepts such as computational thinking, measurement, and instruments, while adhering to the syntax required by the employed databases:

"Pensamiento Computacional" AND medición

"Computational Thinking" AND measuring

"Computational thinking" + "measuring instruments"

"Computational thinking" + "measurement"

"Computational thinking" + "measure instruments"

"Computational thinking" + "measurement tool"

"Computational thinking" AND ("measur* instruments" OR "measur* tool*")

"Computational thinking" AND (“assess” OR “validity” OR “reliability” OR “test” OR “scale”)

The search covered the period from 2012 to 2022 because, as indicated by [34], this is when CT began to consolidate as a construct. Five sources of information were consulted: ScienceDirect, EBSCO Discovery, Scopus, WOS, and Springer.

Regarding inclusion and exclusion criteria, only research articles and reviews were considered for analysis; books, posters, conference proceedings, and articles that did not employ a specific instrument for measuring CT were excluded.

2.3 Identified articles

Initially, the search yielded 439 articles. After removing duplicates, 204 articles remained. Following further screening for relevance, 115 articles were retained. Finally, by applying exclusion criteria, a total of 52 articles were selected for the systematic review. Figure 3 provides a summary of the articles identified at each stage of the search process, which was conducted following the PRISMA statement.
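The counts reported above imply how many records were removed at each stage of the selection process. The following minimal Python sketch reproduces that arithmetic using only the figures reported in this section; the helper name is illustrative.

```python
# Records remaining after each stage, as reported in this section.
stages = [
    ("Records identified", 439),
    ("After removing duplicates", 204),
    ("After relevance screening", 115),
    ("After applying exclusion criteria", 52),
]


def removed_per_stage(stages):
    """Return (stage label, records remaining, records removed at that stage)."""
    rows = []
    previous = None
    for label, remaining in stages:
        removed = 0 if previous is None else previous - remaining
        rows.append((label, remaining, removed))
        previous = remaining
    return rows


for label, remaining, removed in removed_per_stage(stages):
    print(f"{label:<35} {remaining:>4} (removed: {removed})")
```

The stages remove 235, 89, and 63 records, respectively, for a total of 387 records excluded out of the 439 initially identified.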

3. RESULTS AND DISCUSSION

3.1 Analysis of bibliometric indicators

3.1.1 Consulted databases

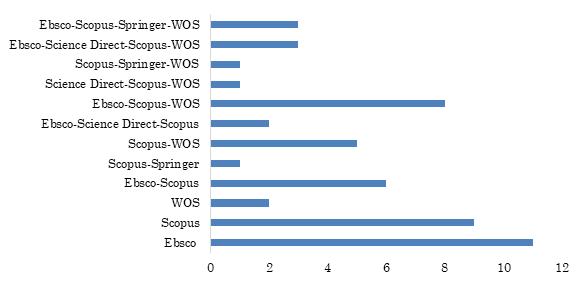

The majority of the articles (approximately 63.4 %) were found in Scopus. Figure 4 shows the number of articles retrieved from each consulted database, with several appearing in multiple sources.

3.1.2 Title keywords

According to the analysis, "computational thinking" was the most prevalent term in the titles of the examined articles, often accompanied by "valid," "scale," "evaluate," and "test," all of which allude to important features of measurement instruments.

3.1.3 Publication year

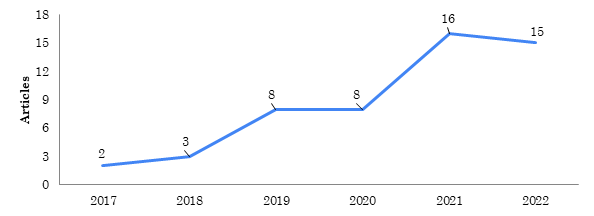

As mentioned earlier, the search spanned from 2012 to 2022. Remarkably, none of the five sources yielded publications related to instrument construction before 2017. Figure 5 depicts the increase in the number of publications dedicated to CT measurement instruments throughout the analyzed period.

3.1.4 Country where the research was conducted

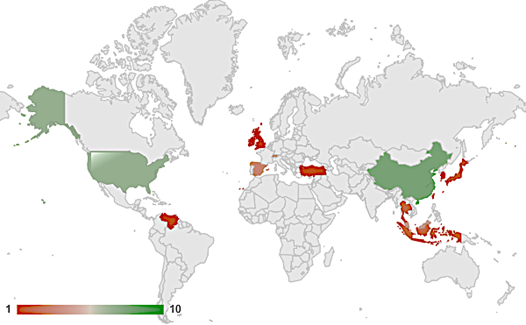

Türkiye had the highest number of articles (ten), followed by China and the United States, with eight and seven articles, respectively. Only one study was carried out in Latin America, specifically in Venezuela. Figure 6 displays the distribution of articles by country.

3.1.5 Language

English was the most prevalent language, with 90 % of the articles being written in this language. Spanish accounted for 4 %, Turkish 4 %, and Japanese 2 %.

3.1.6 Authors

Table 2 presents the most prominent authors based on the number of published articles.

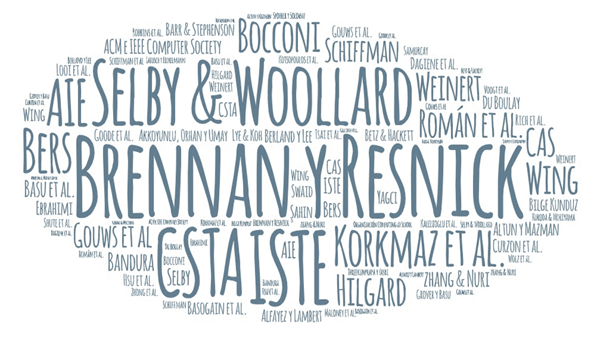

Another important aspect considered in the analysis was which authors were most cited in the analyzed articles (see Figure 7). The most prominent include Brennan and Resnick, Selby and Woollard, the International Society for Technology in Education (ISTE), the Computer Science Teachers Association (CSTA), Román et al., and Korkmaz et al. The last two are notable references because the instruments they proposed, the Computational Thinking Test (CTt) and the Computational Thinking Scale (CTS), respectively, are frequently employed for CT measurement.

3.1.7 Journal, quartile, and JCR indices

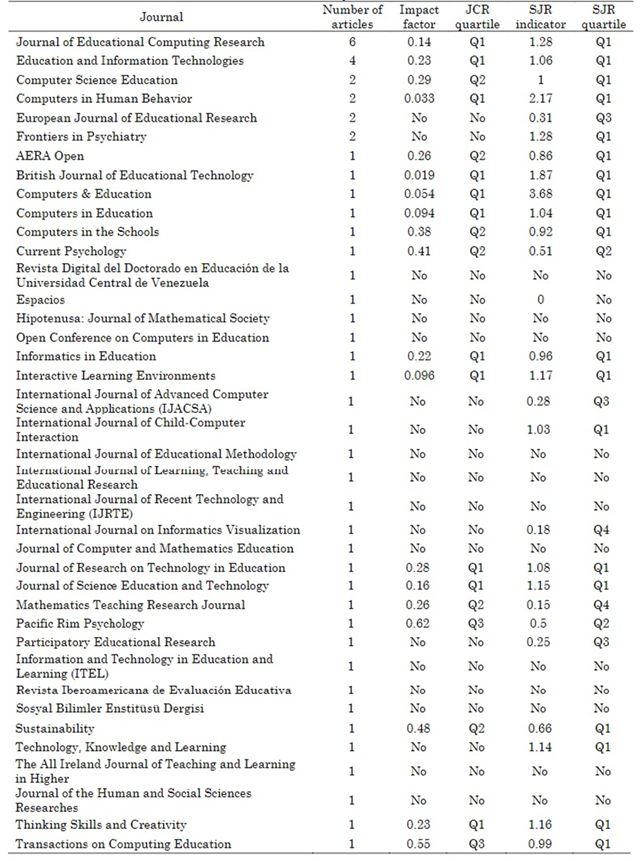

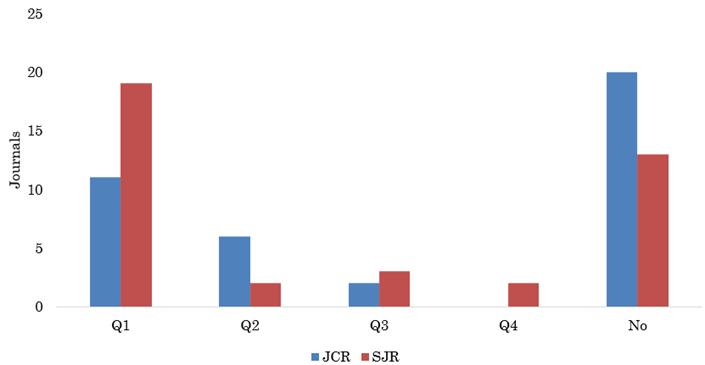

Two impact indicators were employed in the analysis. The JCR focuses primarily on citation counts, providing a journal's impact factor and quartile. The SJR, by contrast, weights citations by the prestige of the citing journals and also assigns quartiles. The two most productive journals were the Journal of Educational Computing Research, with six publications, and Education and Information Technologies, with four. Regarding the two indices, 21 % of the journals had no classification in either. Information on each journal is provided in Table 3. The most prominent journals, ranked by the number of CT-related publications, are listed in [22].

Figure 8 summarizes the quartiles assigned to the journals in which the articles were published. A total of 40 different journals were identified, of which 47.5 % have been classified in a JCR quartile and 65 % have been classified with the SJR indicator.
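Converting these percentages back to counts shows how many of the 40 journals carry each classification. The following minimal Python sketch does this with an illustrative helper. Because 19 + 26 exceeds 40, some journals must be classified in both indices, so the two counts overlap rather than add up.

```python
def share_to_count(total: int, percent: float) -> int:
    """Convert a reported percentage of a total back to a whole count."""
    return round(total * percent / 100)


journals = 40
jcr = share_to_count(journals, 47.5)  # journals with a JCR quartile
sjr = share_to_count(journals, 65)    # journals with an SJR classification
print(jcr, sjr)  # 19 26
```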

3.2 Analysis of the variables of interest

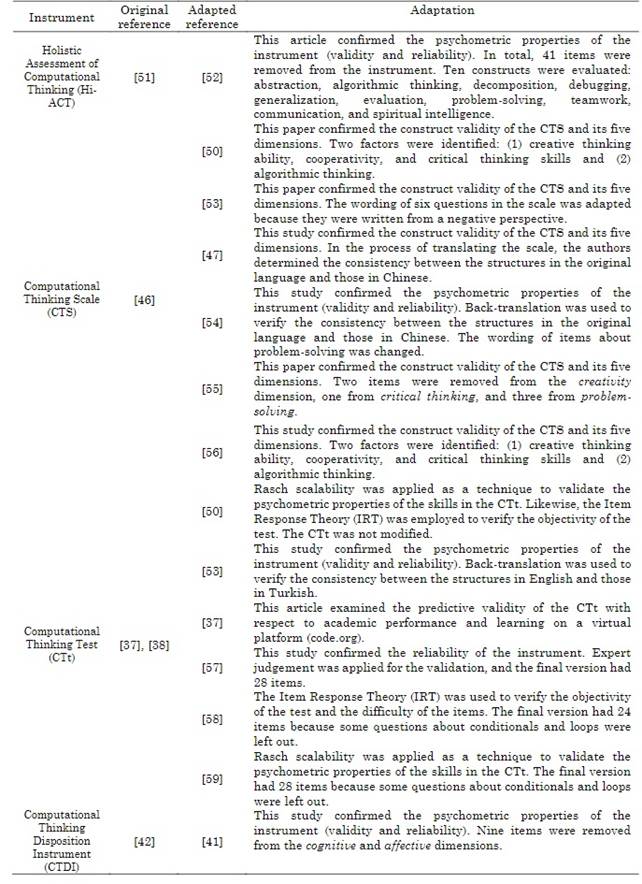

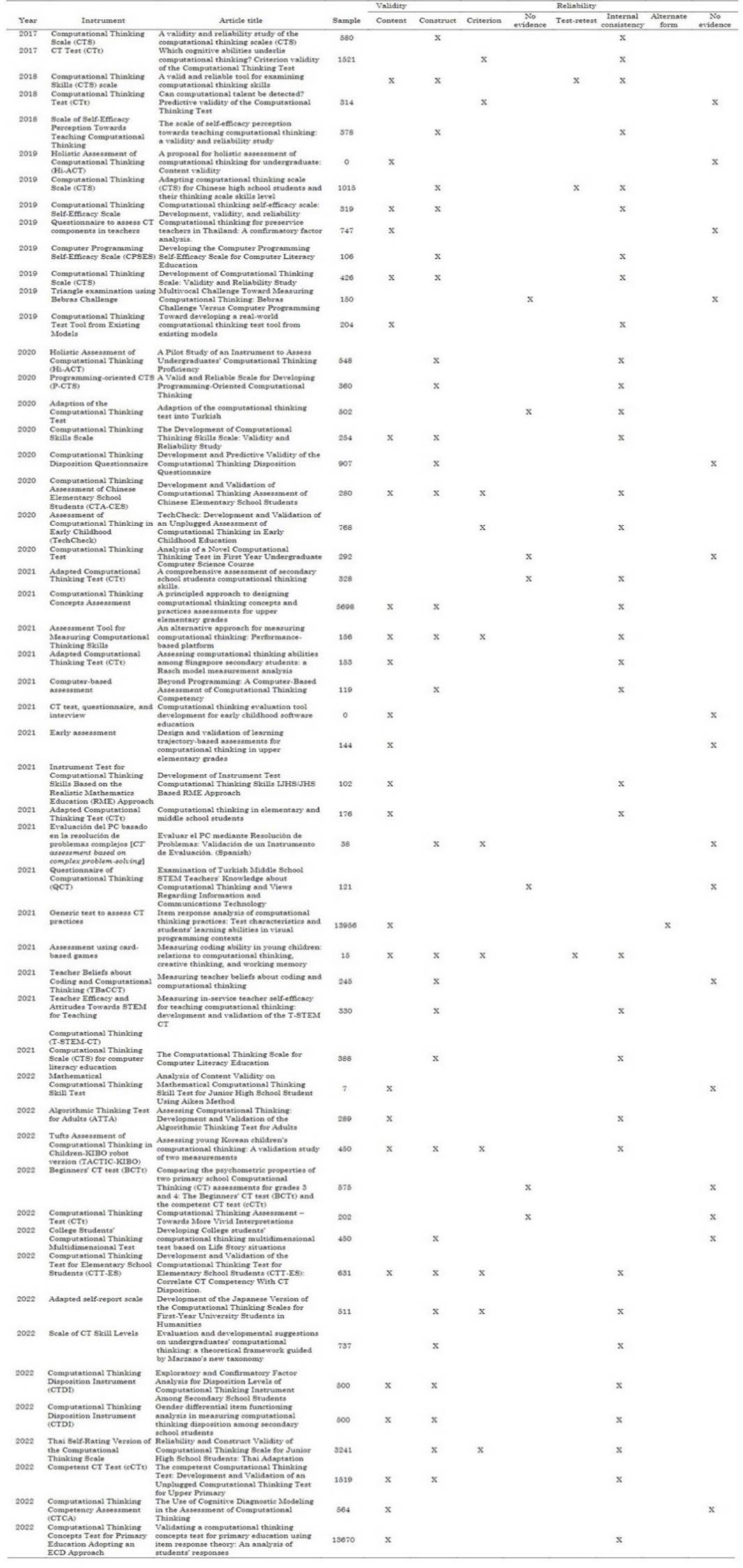

This systematic literature review included 52 articles, of which 40 introduced new instruments; the remaining 12 examined adaptations of some of these instruments. Table 4 lists each adapted instrument together with references to its original and adapted versions.

The evidence reported above regarding the CTS suggests that all the adapted versions of this instrument underwent thorough validation of their psychometric properties. In the case of the CTt, there have been some linguistic adaptations and changes to a number of items.

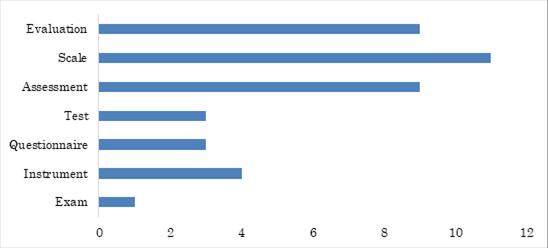

3.2.1 Type of tool

The 40 CT assessment tools analyzed in this paper can be classified as shown in Figure 9. The classification is based on the label each author gave the instrument in their article. Scales were the most common format (28 %), followed by assessments (22.5 %) and tests (22.5 %).

3.2.2 Number of items

In these articles, 5.8 % of the instruments have up to 10 items; 73 %, between 11 and 30 items; 9.7 %, between 31 and 40; and 11.5 %, more than 40.
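Item-count bands need non-overlapping boundaries so that each instrument is counted exactly once. The following minimal Python sketch shows such a binning, assuming bands of up to 10, 11-30, 31-40, and more than 40 items. The function names and item counts are hypothetical and are not data from this review.

```python
from collections import Counter


def item_count_bin(n_items: int) -> str:
    """Assign an instrument to exactly one item-count band (assumed boundaries)."""
    if n_items <= 10:
        return "up to 10"
    if n_items <= 30:
        return "11-30"
    if n_items <= 40:
        return "31-40"
    return "more than 40"


def bin_percentages(item_counts):
    """Percentage of instruments in each band, rounded to one decimal."""
    counts = Counter(item_count_bin(n) for n in item_counts)
    total = len(item_counts)
    return {band: round(100 * c / total, 1) for band, c in counts.items()}


# Hypothetical item counts for ten instruments, for illustration only.
example = [8, 10, 12, 20, 25, 28, 30, 35, 45, 60]
print(bin_percentages(example))
```

Using inclusive upper bounds (`<=`) guarantees that a 10-item or 30-item instrument falls into exactly one band.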

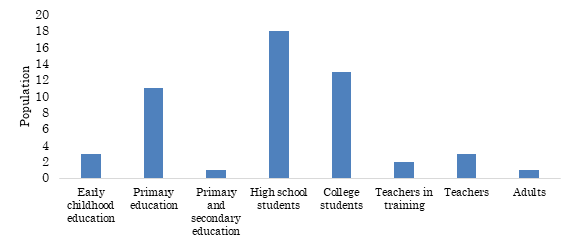

3.2.3 Study population

In terms of study population, 25 % of the papers focused on college students, 3.8 % on teachers in training, 35 % on high school students, 21 % on primary students, 6 % on early childhood education, and 5.7 % on teachers. Figure 10 presents the frequency of each type of study population.

3.2.4 Skills/competencies assessed in CT

Reflecting the diversity of CT definitions, the articles have approached this construct from the perspective of different skills or competencies. The skills/competencies most frequently discussed are abstraction, algorithmic thinking, problem-solving, decomposition, debugging, algorithms, and modularizing. Among these 40 instruments, abstraction, algorithmic thinking, problem-solving, debugging, modularizing, and affective competencies have been evaluated since 2017, and decomposition since 2018. Some of the instruments assess cognitive skills along with affective and social skills, as well as attitudes. Table 5 presents the constructs assessed in each of the 40 instruments.

3.2.5 Validity and reliability

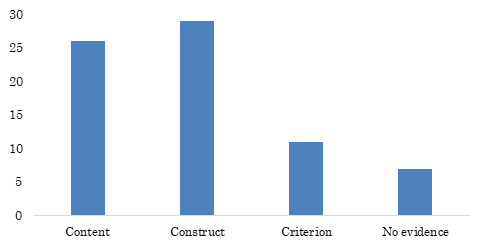

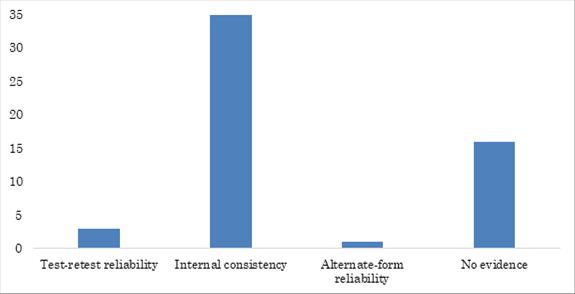

It was found that 87 % of the instruments showed evidence of validity; and 69 %, evidence of reliability. Figures 11 and 12 display the types of validity and reliability reported in the articles.

Regarding the evidence of reliability, 67 % of the articles refer to internal consistency, 30 % do not specify the method used, 5 % refer to test-retest reliability, and 2 % mention alternate-form reliability. Table 6 details the types of validity and reliability employed in each paper.
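Test-retest reliability is commonly estimated as the correlation between the scores that the same respondents obtain on two administrations of an instrument (alternate-form reliability correlates scores on two parallel forms instead). The following minimal Python sketch computes a Pearson correlation for this purpose. The function name and scores are hypothetical, and the reviewed studies may have used other coefficients, such as the intraclass correlation.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long lists with at least 2 scores")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical total scores of the same five students on two occasions.
time_1 = [12, 15, 9, 20, 17]
time_2 = [13, 14, 10, 19, 18]
print(round(pearson_r(time_1, time_2), 3))
```

A coefficient close to 1 indicates that respondents keep roughly the same relative standing across administrations.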

3.3 Discussion

This systematic literature review aimed to identify studies that have introduced instruments for measuring CT, and to analyze bibliometric variables and other variables of interest in order to examine this object of study in greater depth.

A total of 52 research papers and 15 reviews (scoping reviews, mapping reviews, systematic literature reviews, and meta-analyses) were selected. Four of these reviews ([6], [18], [19], [25]) are noteworthy because they identify the need that motivates and justifies the present review: an analysis of the psychometric properties of CT instruments.

For this purpose, it was necessary to establish which methods have been used to determine the validity and reliability of these instruments. This can be addressed from the perspective of Classical Test Theory (CTT) and Item Response Theory (IRT). CTT relies on methods that evaluate test quality by estimating internal consistency and content, criterion, and construct validity. IRT, by contrast, offers a more advanced approach because it models the characteristics of individual items and participants, enabling more accurate estimation of the skills being evaluated and a more sensitive assessment of performance. Integrating both theories allows for a more comprehensive and reliable evaluation of tests, facilitating decision-making in various educational and professional contexts. This review includes several articles that report adaptations of two instruments: the Computational Thinking Test (CTt) and the self-report Computational Thinking Scale (CTS). All the adaptations of the CTS [46] and the CTt [37], [38] have shown evidence of psychometric properties.
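To illustrate the contrast between CTT and IRT described above, the following minimal Python sketch computes a CTT item difficulty (the proportion of respondents answering correctly, which depends on the sample) and the two-parameter logistic (2PL) item response function, one common IRT model in which the probability of a correct answer depends on the respondent's ability and on the item's own discrimination and difficulty. The review does not state which IRT models the reviewed studies used; the 2PL choice, the function names, and all parameter values are assumptions for illustration.

```python
import math


def p_correct_2pl(theta: float, a: float, b: float) -> float:
    """2PL item response function: probability that a respondent with
    ability theta answers an item with discrimination a and difficulty b correctly."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def ctt_item_difficulty(responses):
    """CTT item difficulty (p-value): proportion of 1s (correct answers) in a 0/1 list."""
    return sum(responses) / len(responses)


# Hypothetical items: (discrimination a, difficulty b).
items = {"easy": (0.8, -1.0), "hard": (2.0, 1.0)}
for theta in (-2.0, 0.0, 2.0):
    probs = {name: round(p_correct_2pl(theta, a, b), 2) for name, (a, b) in items.items()}
    print(theta, probs)

print(ctt_item_difficulty([1, 1, 0, 1, 0]))  # 0.6
```

In the 2PL model, a respondent whose ability equals an item's difficulty (theta = b) has a 0.5 probability of answering it correctly, whereas the CTT p-value would change if the same item were given to a stronger or weaker group.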

Considering both the authors of the 52 articles and the authors most cited within them, Marcos Román-González and Özgen Korkmaz were found at the top of both lists, reflecting their extensive research experience in CT assessment.

Six of the research questions in the review protocol can be used to delve deeper into this discussion:

What is the target population of the instrument?

The 52 papers analyzed in this study address populations at various educational levels, ranging from early childhood education to college, as well as teachers in training. Only one instrument, the Algorithmic Thinking Test for Adults (ATTA), was designed exclusively for adults. The most frequent target populations were high school (35 %) and college (25 %) students. Two articles [60], [61] focused on teachers in training; three [62]-[64], on teachers; and one [49], on adults. Given this distribution, there is room for research on the design and validation of instruments that assess CT in adults, teachers, and young children.

What instruments have been proposed to measure CT?

The selected studies propose 40 tools to measure CT in different formats (exam, instrument, questionnaire, test, assessment, scale, and evaluation), with scales being the most common. Three articles complemented the instrument with other means of assessing CT: (1) an interactive web application [48], (2) tasks from Bebras cards combined with KIBO kits [65], and (3) a game-based strategy [16]. The latter two were used in research on early childhood education. This inventory of types, formats, constructs evaluated, number of items, and other information about the instruments can support decision-making in future research on CT measurement. It is worth noting that 2017 marked a milestone with the first publication of an instrument designed to assess CT. This differs from the perspective of [66]. This finding reflects the scope of the present systematic review, which did not identify any instruments published before that year.

What constructs or skills do the instruments assess?

The instruments assess various dimensions associated with CT, including concepts, attitudes, and procedures; some authors have also included feelings. The variety of skills assessed in the reviewed articles suggests that the construct can be evaluated in different contexts. It also suggests that future research should include skills, concepts, attitudes, procedures, and feelings for a comprehensive CT assessment [67], [68]. The results of this review are in line with previous reviews [18], [19], [24], [29], [32], which established that algorithmic thinking, problem-solving, and abstraction are the CT skills most commonly assessed. Likewise, computational concepts (sequences, conditionals, and loops) were found to be widely assessed in various educational environments, which is consistent with [20], [23], [27].

What are the psychometric properties of the instruments?

The psychometric properties of the instruments were examined in terms of validity and reliability. Of the instruments assessed, 86 % demonstrated validity and 69 % reliability. Content and construct validity were predominant, and internal consistency was the most commonly used reliability criterion in the selected studies. All articles that adapted the CTS presented evidence of its psychometric properties; among those that adapted the CTt, only one did not provide evidence of validity. These results differ from those reported in [6], where the authors noted that a substantial number of CT assessments lacked evidence of reliability and validity.

What statistical methods were used to analyze the psychometric properties?

To determine the validity of the instruments, the most common statistical methods were correlation, Exploratory Factor Analysis (EFA), and Confirmatory Factor Analysis (CFA). In most cases, reliability was tested using Cronbach’s alpha. In the adaptations of the CTS [47], [50], [53]-[56], the most popular validation method was CFA. CFA also appeared in [25] as the most common method to validate scales.
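Because Cronbach's alpha was the most common reliability statistic, the following minimal Python sketch shows how it is computed from a respondents x items score matrix with the standard formula alpha = k/(k-1) * (1 - sum of item variances / variance of total scores), where k is the number of items. The function name and the Likert responses are hypothetical. Sample variances are used throughout; the ratio is the same if population variances are used consistently.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents x items score matrix (list of rows)."""
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*scores))  # one tuple of scores per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in scores])  # variance of summed scores
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


# Hypothetical 5-point Likert responses: 5 respondents x 4 items.
data = [
    [4, 5, 4, 4],
    [3, 3, 2, 3],
    [5, 5, 5, 4],
    [2, 2, 3, 2],
    [4, 4, 4, 5],
]
print(round(cronbach_alpha(data), 3))
```

Alpha approaches 1 when items covary strongly (here the respondents rank similarly on every item). It summarizes internal consistency only and says nothing about the factor structure that EFA and CFA examine.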

What elements are considered in CT assessment according to the literature reviews?

CT assessment focuses on the basic concepts of educational technology, highlighting its foundations and contributions to the field of teaching [69]. Table 7 outlines the elements of CT assessment that were considered in the 15 systematic literature reviews, mapping reviews, and meta-analyses.

Skills, attitudes, and perceptions are dimensions widely used to measure CT. Some studies [18]-[22], [27] focus on measuring CT through computational practices and concepts. All these reviews cite Brennan and Resnick’s [7] curriculum guide as a foundational resource for CT. Other studies [6], [18], [19], [23], [29] use self-report scales to analyze students’ perceptions and preferences. Only one systematic literature review [24] defines abstraction from a multi-dimensional perspective.

4. CONCLUSIONS

This study reviewed 52 research articles on CT assessment and measurement published in academic journals between 2012 and 2022. It also analyzed scoping reviews, systematic mapping reviews, and literature reviews, which revealed (a) an interest in consolidating the evidence on CT assessment and (b) research gaps that this review addresses. Accordingly, this literature review was conducted to examine the psychometric properties of CT assessment and measurement instruments, as well as CT-related variables.

This systematic review followed a documented process so that the review protocol can be repeated. The research questions helped define the scope of the bibliometric variables and variables of interest. The bibliometric variables indicate that the number of articles on CT measurement instruments has increased since 2019. Most of the research on CT measurement was conducted in Türkiye, the US, and China. Based on the JCR index, 27.5 % of the articles were published in Q1 journals; based on the SJR index, 47.5 % appeared in Q1 outlets. No evidence of CT measurement instruments developed in Colombia was found. Regarding key authors, Brennan and Resnick, as well as Selby and Woollard, are commonly cited for their widely recognized CT curriculum designs. Marcos Román-González and Özgen Korkmaz stand out for their numerous publications and the international adaptation of their instruments. The Computational Thinking Scale (CTS) has been adapted and psychometrically validated in Europe and Asia, and the Computational Thinking Test (CTt) has been adapted and its validity established in the same regions. However, no adaptations of these instruments to Latin American countries were identified. Since the instruments were mostly applied to high school and college students, future research should address other populations, such as young children or adults.

The results of this review highlight the diverse range of CT skills that can be evaluated. Among these skills, which have a multidimensional origin, algorithmic thinking, cognitive skills, and problem-solving are the most common. Computational capabilities are also widely assessed, especially concepts directly related to computer programming, such as sequences, conditionals, loops, and events. Notably, abstraction has been evaluated across all populations, yet there is little scientific evidence of a rigorous evaluation of this construct; only Ezeamuzie et al. have formally operationalized it.

This review revealed a variety of instruments to measure CT-with scales being the most frequently used format. This suggests that CT should be assessed in a comprehensive manner by addressing a wide range of associated skills, concepts, attitudes, and procedures. Most reviewed instruments demonstrated both validity and reliability, with content and construct validity, as well as internal consistency, being the predominant criteria. The statistical methods most commonly employed to analyze these properties are correlation, Exploratory Factor Analysis (EFA), Confirmatory Factor Analysis (CFA), and Cronbach’s alpha.

This literature review contributes to future studies by documenting the progress made in CT assessment through measurement instruments with strong psychometric properties. In conclusion, this review achieved its objective: it identified the tools that have been used to measure CT, along with their psychometric properties and the skills they assess.