
Avances en Psicología Latinoamericana

Print version ISSN 1794-4724

Av. Psicol. Latinoam. vol.31 no.1 Bogotá Jan./Apr. 2013

Influences of sex, type and intensity of emotion on the recognition of static and dynamic facial expressions*

NELSON TORRO-ALVES**

IZABELA ALVES DE OLIVEIRA BEZERRA***

RIANNE GOMES E CLAUDINO****

THOBIAS CAVALCANTI LAURINDO PEREIRA*****

* This work received financial support from CNPq, Grant No. 479366/2011-0 (call for proposals 14/2011), awarded to the first author.

** Graduated in Psychology from the University of São Paulo, Ribeirão Preto, with a Master's degree and a PhD in Psychobiology from the University of São Paulo. He is currently Adjunct Professor II and Vice-Head of the Department of Psychology of the Federal University of Paraíba.

E-mail: nelsontorro@yahoo.com.br

*** Undergraduate student in Psychology at the Federal University of Paraíba, currently engaged in the Sandwich Undergraduate Program under the supervision of Prof. Dr. Nelson Torro Alves and Prof. Aznar Casanova at the University of Barcelona, Spain.

E-mail: izabela_bezerra@yahoo.com

**** Sandwich undergraduate scholarship holder abroad, University of Barcelona, Spain. Undergraduate student in Psychology at the Federal University of Paraíba and member of the Laboratório de Ciência Cognitiva e Percepção Humana (LACOP).

E-mail: riannegclaudino@hotmail.com

***** Undergraduate student in Psychology at the Federal University of Paraíba, currently a member of the Laboratório de Percepção, Neurociências e Comportamento (LPNeC).

E-mail: thobiascavalcanti@gmail.com

To cite this article: Alves, N. T., Bezerra, I. A. O., Claudino, R. G., & Pereira, T. C. L. (2013). Recognition of static and dynamic facial expressions: Influences of sex, type and intensity of emotion. Avances en Psicología Latinoamericana, 31(1), 192-199.

Received: August 1, 2012

Accepted: February 17, 2013

Abstract

The ecological validity of using static, intense facial expressions in emotion recognition research has been questioned. Recent studies have recommended facial stimuli that better match the natural conditions of social interaction, which involve motion and variations in emotional intensity. In this study, we compared the recognition of static and dynamic facial expressions of happiness, fear, anger and sadness, presented at four emotional intensities (25%, 50%, 75% and 100%). Twenty volunteers (9 women and 11 men), aged between 19 and 31 years, took part in the study. The experiment consisted of two sessions in which participants had to identify the emotion in static (photographs) and dynamic (videos) displays of facial expressions on a computer screen. Mean accuracy was submitted to a repeated-measures Anova with the model 2 sexes x [2 conditions x 4 expressions x 4 intensities]. We observed an advantage in the recognition of dynamic expressions of happiness and fear compared with the static stimuli (p < .05). Analysis of interactions showed that expressions with 25% intensity were better recognized in the dynamic condition (p < .05). The addition of motion improved recognition, especially in male participants (p < .05). We concluded that the effect of motion varies as a function of the type of emotion, the intensity of the expression and the sex of the participant. These results support the hypothesis that dynamic stimuli have greater ecological validity and are more appropriate for research on emotion.

Keywords: Facial expressions, emotion, motion

The interpretation of facial expressions plays an important role in socialization and human interaction (Trautmann, Fehr, & Herrmann, 2009). Studies indicate that individuals with facial paralysis, such as those with Moebius syndrome, have difficulty establishing interpersonal relationships (Ekman, 1992). Similarly, patients with frontotemporal dementia, who show deficits in the recognition of facial expressions, are impaired in social interaction (Keane, Calder, Hodges, & Young, 2002).

Studies on emotion recognition have predominantly used intense and static facial expressions. In daily life, however, we are faced with moving expressions that vary in emotional intensity. For this reason, the ecological validity of static faces has been questioned, since they can be regarded as impoverished versions of the natural stimuli of the social environment (Roark, Barret, Spence, Abdi, & O'Toole, 2003).

Studies have shown differences between dynamic and static conditions, especially when participants are asked to judge subtle facial expressions (Ambadar, Schooler, & Cohn, 2005). In general, dynamic expressions recruit more widespread brain areas, which might allow a better interpretation of the stimuli (Sato, Fujimura, & Suzuki, 2008; Trautmann, Fehr, & Herrmann, 2009). Sato and Yoshikawa (2007) verified that dynamic expressions elicit greater brain activation in the visual cortex, amygdala and ventral premotor cortex, and that the addition of motion improves the recognition of emotions.

Fujimura and Suzuki (2010a) found that the addition of movement contributes to the recognition of facial expressions in the peripheral visual field. In a different study, the same authors verified that expressions of happiness and fear were better recognized in the dynamic condition, and concluded that the effect of motion depends on the kind of emotion being expressed and on its physical characteristics (Fujimura & Suzuki, 2010b). In both cases, the authors did not use expressions varying in emotional intensity.

In general, these studies indicate that motion can influence the recognition of emotions. However, it remains unclear what effect motion has on the perception of subtle facial expressions, even though such expressions are probably more frequent than intense ones in the social environment.

The recognition of facial expressions can also be influenced by other variables, such as the sex of the participants. In general, the literature has shown that women have an advantage over men in the recognition of emotions (Thayer & Johnsen, 2000). Hampson, Anders, and Mullin (2006), for example, verified that women are more accurate in judging static faces, which would be consistent with the evolutionary history of the species and with gender differences.

In the present study, we investigated, in both sexes, the recognition of dynamic and static facial expressions of happiness, sadness, fear and anger, presented at four different emotional intensities. Varying the type of emotion and its intensity was important to evaluate whether motion affects the recognition of emotions globally or whether its effect is associated with other stimulus variables. We hypothesized that movement improves the recognition of facial expressions, especially in more difficult conditions, i.e., when participants are asked to evaluate faces with low levels of emotional intensity.

Methods

Participants

The study included twenty volunteers (9 women and 11 men), students of the Federal University of Paraíba, aged 19 to 31 years (M = 22.6, SD = 3.46 years). According to a self-report questionnaire, participants had no history of neurological or psychiatric illness and had normal or corrected-to-normal vision. The study was approved by the Ethics Committee of the university, and written informed consent was obtained from all participants.

Material

The static stimuli consisted of color photographs of four facial expressions (happiness, sadness, fear and anger) posed by four models (two female and two male). Images were selected from the NimStim Emotional Face Stimuli Database (Tottenham et al., 2009) and correspond to the models numbered 01, 04, 16 and 37.

The software Morpheus Photo Animation Suite 3.10 was used to compose static faces with emotional intensities between the neutral and the fully expressive face. Emotional intensity increased in steps of 25% from the neutral face to the face with maximal emotional intensity (100%) (Figure 1). Adobe Photoshop 7.1 was used to improve image quality.

Dynamic facial expressions (movies) were generated from sequences of morphed images that started at the neutral face and increased in steps of 1% up to the expressions with 25, 50, 75 and 100% emotional intensity. Movies were presented at a rate of 25 frames per second; therefore, movies lasting 1, 2, 3 and 4 s ended, respectively, at the faces with 25, 50, 75 and 100% intensity. The program Superlab 4.0 was used to present the stimuli and collect the data.
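The link between the 1% morph step, the 25 frames-per-second rate and the movie durations is simple arithmetic: each percentage point is one frame, so a movie ending at 25% intensity has 25 frames and lasts 25 / 25 = 1 s. The Python sketch below reproduces that calculation. It assumes one frame per 1% increment and does not count the neutral starting frame, a detail the text does not specify (counting it would add 0.04 s to each movie).

```python
FPS = 25          # frame rate stated in the text
STEP_PERCENT = 1  # intensity increment per frame, as stated in the text


def movie_timing(target_percent, step=STEP_PERCENT, fps=FPS):
    """Return (number of frames, duration in seconds) for a movie that ramps
    from the neutral face (0%) up to target_percent intensity.

    Assumption: one frame per morph step; the neutral start frame is not counted.
    """
    frames = target_percent // step
    return frames, frames / fps


for target in (25, 50, 75, 100):
    frames, seconds = movie_timing(target)
    print(f"{target}%: {frames} frames, {seconds:g} s")
```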

Procedure

Stimuli were presented on a Dell S40 computer with an Intel Core 2 Quad Q8300 processor (2.50 GHz, 4 MB L2 cache, 1333 MHz FSB), connected to a 22-inch Dell D2201 monitor. Each participant was led into the experimental room and asked to sit 57 cm from the center of the computer screen.
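A viewing distance of 57 cm is a common convention in vision research because, at that distance, an object 1 cm wide on the screen subtends approximately 1 degree of visual angle. The sketch below computes the exact angle; the stimulus sizes in the loop are hypothetical, since the article does not report the size of the faces on screen.

```python
import math

VIEWING_DISTANCE_CM = 57.0  # viewing distance stated in the text


def visual_angle_deg(size_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Full visual angle (degrees) subtended by an object of size_cm,
    centred on the line of sight, viewed from distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


# Hypothetical on-screen sizes: the article does not report stimulus size.
for size in (1.0, 10.0, 20.0):
    print(f"{size:4.1f} cm -> {visual_angle_deg(size):.2f} deg")
```

At this distance the approximation "1 cm is about 1 degree" holds well for small stimuli and drifts slowly as the stimulus gets larger.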

The experiment was divided into two sessions. In the first, we presented static stimuli, which consisted of pictures of facial expressions of happiness, sadness, anger and fear at emotional intensities of 25, 50, 75 and 100%. In the second, we presented dynamic stimuli (movies), which started at the neutral face and advanced to faces with the different emotional intensities. Each session comprised 64 stimuli, resulting from the combination of 4 facial expressions (happiness, sadness, fear and anger) x 4 intensities (25, 50, 75 and 100%) x 4 models (2 male and 2 female). Stimuli were presented in random order, and each participant was tested individually.
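The 4 x 4 x 4 structure of each session can be sketched as a full factorial crossing followed by a shuffle. The Python example below is only an illustration: the article does not describe how Superlab randomized the order, so the uniform shuffle from Python's `random` module and the seed values are assumptions, and the model labels simply reuse the NimStim numbers given in the Material section.

```python
import itertools
import random

EXPRESSIONS = ("happiness", "sadness", "fear", "anger")
INTENSITIES = (25, 50, 75, 100)    # percent of maximal intensity
MODELS = ("01", "04", "16", "37")  # NimStim model numbers from the text


def build_session(seed=None):
    """Return the 64 trials of one session (4 x 4 x 4) in random order.

    Assumption: a uniform random permutation; seeds are illustrative only.
    """
    trials = [
        {"expression": e, "intensity": i, "model": m}
        for e, i, m in itertools.product(EXPRESSIONS, INTENSITIES, MODELS)
    ]
    rng = random.Random(seed)
    rng.shuffle(trials)
    return trials


static_session = build_session(seed=1)   # session 1: photographs
dynamic_session = build_session(seed=2)  # session 2: movies
print(len(static_session), static_session[0])
```

Using a separate `random.Random` instance per session keeps each order reproducible without touching the global random state.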

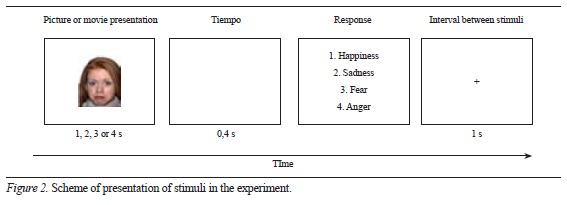

Static and dynamic stimuli were presented for specific exposure times according to the intensity of the emotion (25% = 1 s, 50% = 2 s, 75% = 3 s and 100% = 4 s). After stimulus presentation (picture or movie), a blank screen appeared for 0.4 s, followed by a response screen. Participants responded using the keyboard, classifying the perceived emotion as one of the options: 1 - happiness, 2 - sadness, 3 - fear, and 4 - anger. After the response, a fixation point was presented for 1 s, followed by a new stimulus (Figure 2).
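The fixed parts of each trial can be written down as an ordered schedule. The sketch below encodes the exposure times, the 0.4 s blank, the open-ended response screen, the 1 s fixation and the 1-4 key mapping described above; `trial_events` and `score` are hypothetical helpers for illustration, not part of Superlab.

```python
# Exposure time (s) for each intensity, as stated in the Procedure.
EXPOSURE_S = {25: 1.0, 50: 2.0, 75: 3.0, 100: 4.0}
BLANK_S = 0.4     # blank screen after the stimulus
FIXATION_S = 1.0  # fixation point before the next stimulus
RESPONSE_KEYS = {"1": "happiness", "2": "sadness", "3": "fear", "4": "anger"}


def trial_events(intensity):
    """Ordered (event, duration in s) pairs for one trial.

    The response screen has no fixed duration (None): it waits for a key press.
    """
    return [
        ("stimulus", EXPOSURE_S[intensity]),
        ("blank", BLANK_S),
        ("response", None),
        ("fixation", FIXATION_S),
    ]


def score(key, shown_expression):
    """Return 1 if the pressed key names the displayed expression, else 0."""
    return int(RESPONSE_KEYS.get(key) == shown_expression)


fixed = sum(d for _, d in trial_events(25) if d is not None)
print(f"Fixed duration of a 25% trial: {fixed:.1f} s plus response time")
print(score("3", "fear"), score("1", "fear"))
```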

Results

The software PASW 18.0 was used for data analysis. Mean percentages of correct responses in the recognition of facial expressions were calculated and submitted to a repeated-measures Anova according to the model: 2 sexes x [2 experimental conditions (static and dynamic) x 4 facial expressions (happiness, sadness, fear and anger) x 4 emotional intensities (25, 50, 75 and 100%)].
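Before the Anova, trial-level responses have to be reduced to one percentage of correct responses per participant and design cell. A minimal sketch follows, assuming a hypothetical trial record format (the field names are illustrative, not taken from the study's data files). Because each session crossed every expression and intensity with 4 models, each cell contains 4 trials, so an individual cell score can only be 0, 25, 50, 75 or 100%.

```python
from collections import defaultdict


def cell_accuracy(trials):
    """Percentage of correct responses per participant and design cell.

    Each trial is a dict with (hypothetical) keys: participant, sex,
    condition, expression, intensity, correct (0 or 1).
    Returns {(participant, sex, condition, expression, intensity): percent}.
    """
    hits = defaultdict(int)
    counts = defaultdict(int)
    for t in trials:
        key = (t["participant"], t["sex"], t["condition"], t["expression"], t["intensity"])
        hits[key] += t["correct"]
        counts[key] += 1
    return {key: 100.0 * hits[key] / counts[key] for key in counts}


# Hypothetical responses of one participant to the four models in one cell.
example = [
    {"participant": "P01", "sex": "M", "condition": "dynamic",
     "expression": "fear", "intensity": 25, "correct": c}
    for c in (1, 1, 0, 1)
]
print(cell_accuracy(example))
```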

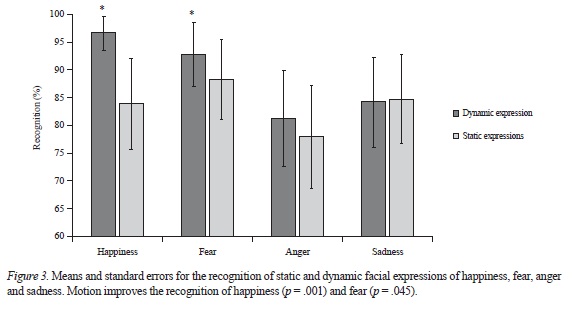

We found a statistically significant difference between the static and dynamic conditions (F(1, 19) = 15.249; p = .001), and an interaction between the factors "Experimental Conditions" and "Facial Expressions" (F(3, 57) = 5.045; p = .004). The Bonferroni post hoc test showed that dynamic expressions of happiness (p = .001) and fear (p = .045) were better recognized than their static versions (Figure 3). In the dynamic condition, happiness and fear were recognized more accurately than anger and sadness (p < .05). In the static condition, fear was better recognized than anger (p < .05).

We found no statistically significant differences between the sexes in the recognition of emotions (F(1, 19) = 6.055; p < .494). However, the Anova showed a significant interaction between the factors "Sex" and "Experimental Condition" (F(1, 19) = 5.217; p < .034). The Bonferroni post hoc test indicated that men were more accurate in recognizing dynamic than static expressions (p < .001). Women, in turn, obtained similar scores in both conditions (p = .297) (Figure 4).
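An F statistic with 1 numerator degree of freedom, such as F(1, 19), can be converted to a p-value through the identity F(1, ν) = t(ν)², so that p = P(|T| > √F) for a Student t variable with ν degrees of freedom. The sketch below does this with only the Python standard library by integrating the t density with Simpson's rule; it is an illustration of the relation, not the procedure PASW uses. Effects with 3 numerator degrees of freedom, such as F(3, 57), would instead need a general F-distribution routine, for example SciPy's `scipy.stats.f.sf`.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def p_from_f1(f_value, df_error, n=2000):
    """Upper-tail p-value of F(1, df_error), using F(1, v) = t(v)**2.

    Integrates the t density from 0 to sqrt(F) with Simpson's rule
    (n must be even); by symmetry, p = 1 - 2 * integral.
    """
    t = math.sqrt(f_value)
    h = t / n
    total = t_pdf(0.0, df_error) + t_pdf(t, df_error)
    for i in range(1, n):
        weight = 4 if i % 2 else 2
        total += weight * t_pdf(i * h, df_error)
    integral = total * h / 3
    return max(0.0, 1.0 - 2.0 * integral)


for f_value in (15.249, 5.217):
    print(f"F(1, 19) = {f_value}: p = {p_from_f1(f_value, 19):.4f}")
```

The two values in the loop are the condition main effect and the sex x condition interaction reported above, so the printed p-values can be compared with the reported ones.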

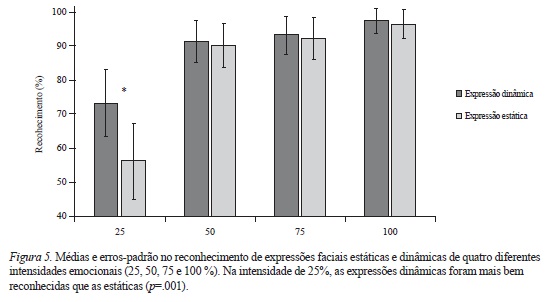

We found statistically significant differences between emotional intensities (F(3, 57) = 161.117; p = .001). The Bonferroni post hoc test showed that subtle facial expressions (25%) were recognized less accurately than expressions of higher intensity (50, 75 and 100%) (p = .001) (Figure 5). An interaction between the factors "Experimental Conditions" and "Emotional Intensities" was also found (F(3, 57) = 16.816; p < .001), and the Bonferroni post hoc test indicated that dynamic expressions were better recognized than static expressions only at the intensity of 25% (p = .001).
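The Bonferroni post hoc comparisons control the family-wise error rate by multiplying each raw p-value by the number of comparisons and capping the result at 1. With four intensity levels there are 6 pairwise comparisons. The sketch below applies this standard adjustment; we assume, without confirmation from the text, that the statistics package used this form, and the raw p-values are hypothetical, not the study's data.

```python
from itertools import combinations


def bonferroni(raw_p):
    """Bonferroni correction: multiply each raw p-value by the number of
    comparisons in the family and cap the result at 1."""
    m = len(raw_p)
    return {pair: min(1.0, p * m) for pair, p in raw_p.items()}


INTENSITIES = (25, 50, 75, 100)
pairs = list(combinations(INTENSITIES, 2))  # 6 pairwise comparisons

# Hypothetical raw p-values, for illustration only.
raw = dict(zip(pairs, (0.0001, 0.0001, 0.0001, 0.02, 0.30, 0.40)))
adjusted = bonferroni(raw)
for pair in pairs:
    print(pair, f"raw p = {raw[pair]:.4f}", f"adjusted p = {adjusted[pair]:.4f}")
```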

Discussion

In this study, we analyzed the influence of motion on the recognition of facial expressions of happiness, sadness, fear and anger displayed at different emotional intensities (25, 50, 75 and 100%). Overall, results indicated that participants identified emotions more accurately in dynamic faces, which is consistent with other findings in the literature showing that dynamic expressions are better recognized than static expressions (Ambadar, Schooler, & Cohn, 2005; Torro-Alves, in press).

However, further analysis of the interactions showed that the effect of motion depended on other variables: the type and intensity of the emotion and the sex of the participant. With regard to the type of emotion, dynamic expressions of fear and happiness were recognized more accurately than static ones, whereas there were no differences between the recognition of dynamic and static expressions of sadness and anger. These results indicate that the addition of motion does not benefit the recognition of all emotions equally; its effect varies according to the type of emotion.

Other studies also report that motion affects the recognition of different emotions differently. Recio, Sommer, and Schacht (2011), for example, found an advantage for the recognition of dynamic expressions of happiness, but no differences between static and dynamic angry faces. Fujimura and Suzuki (2010a) found a different result for facial expressions presented in the periphery of the visual field. Among the three pleasant emotions (excited, happy and relaxed) and the three unpleasant emotions (fear, anger and sadness) investigated, they found an advantage only for the recognition of the dynamic expression of anger, in comparison with its static version. It is worth mentioning, however, that the method of lateralized presentation is very different from the one used in the present study, which could explain the discordant results on the recognition of static and dynamic expressions of anger and fear.

As observed by Fujimura and Suzuki (2010b), the effect of adding motion on recognition may depend on the physical properties of the expression. Expressions of happiness and fear have marked features such as an open mouth and raised mouth corners, whereas expressions of anger and sadness involve less pronounced physical changes. Therefore, for happiness and fear, motion may become more salient during the transition from the neutral face to the emotional expression.

Besides depending on the type of emotion, the influence of motion was associated with emotional intensity. We found an advantage for the dynamic condition only in the perception of subtle facial expressions, i.e., those with 25% of the maximal intensity. This indicates that the presence or absence of motion does not affect the recognition of more intense expressions (50, 75 and 100%). However, when expressions are presented for shorter times and at reduced intensity, the addition of motion improves the participants' accuracy.

These findings are consistent with the notion that motion facilitates the processing of the different types of information available in the face, such as expression, identity and sex, especially when the stimuli are degraded, i.e., subjected to spatial-frequency filtering, color reversal, changes in orientation or pixelation (Fiorentini & Viviani, 2011; Kátsyri, 2006).

Results showed no general differences between the sexes in the recognition of emotions. However, when the interaction between sex and condition was analyzed, we observed that facial motion facilitated recognition in men. Many studies report sex differences, usually attributing to women a greater capacity to evaluate, express and perceive emotions (Kring & Gordon, 1998). Such differences might have arisen from the adaptive needs of each sex and the evolutionary history of the species, which gave women the primary role in caring for offspring and therefore required the ability to recognize the affective states of children quickly and accurately (Hampson, Anders, & Mullin, 2006). Nevertheless, our results indicate that any underperformance of men in recognizing emotions can be compensated by adding motion to the face: in the evaluation of dynamic faces, men and women performed similarly.

A possible limitation of the present study is the small number of participants. Although we found an interaction between sex and condition (static and dynamic expressions), a larger sample might clarify the role of these variables in emotion recognition. It is worth noting that we found no main effect for the relevant independent (sex) and dependent variables (motion and facial expressions). A main effect of emotional intensity was observed, but it is of limited interest in the context of the present study: because exposure time increased with intensity, we expected a relation between exposure time and accuracy. The more time participants had to judge the stimuli, the more accurate their recognition.

The present results may guide future studies investigating the relation between these variables, for example by determining the emotional intensity at which the difference between dynamic and static expressions begins to appear. We used intensities of 25, 50, 75 and 100%, but other studies could employ a continuum of emotional intensity increasing in steps of 10%. Likewise, it would be interesting to analyze the recognition of the basic expressions of surprise and disgust, which were not included here.

In short, the results of the present study are relevant because they indicate that the effect of adding motion may vary depending on the type of emotion, the intensity of the expression and the sex of the participant who evaluates the face. It is important to note that whenever we found differences between conditions, they favored dynamic expressions, never static ones, confirming the hypothesis that dynamic stimuli have greater ecological validity and are better suited to research on emotion. These findings can contribute to the design of further studies investigating the role of motion and other variables in the recognition of facial expressions in more detail.

References

Ambadar, Z., Schooler, J., & Cohn, J. F. (2005). Deciphering the enigmatic face: The importance of facial dynamics to interpreting subtle facial expressions. Psychological Science, 16, 403-410.

Ekman, P. (1992). An argument for basic emotions. Cognition and Emotion, 6, 169-200.

Fiorentini, C., & Viviani, P. (2011). Is there a dynamic advantage for facial expressions? Journal of Vision, 11(3), 1-15.

Fujimura, T., & Suzuki, N. (2010a). Recognition of dynamic facial expressions in peripheral and central vision. Shinrigaku Kenkyu, 81(4), 348-355.

Fujimura, T., & Suzuki, N. (2010b). Effects of dynamic information in recognizing facial expressions on dimensional and categorical judgments. Perception, 39(4), 543-552.

Hampson, E., Anders, S. M., & Mullin, L. I. (2006). A female advantage in the recognition of emotional facial expressions: Test of an evolutionary hypothesis. Evolution and Human Behavior, 27, 401-416.

Kátsyri, J. (2006). Human recognition of basic emotions from posed and animated dynamic facial expressions. Unpublished doctoral dissertation. Available online in PDF format: http://lib.tkk.fi/Diss/2006/isbn951228538X/isbn951228538X.pdf

Keane, J., Calder, A., Hodges, J., & Young, A. (2002). Face and emotion processing in frontal variant frontotemporal dementia. Neuropsychologia, 40, 655-665.

Kring, A. M., & Gordon, A. H. (1998). Sex differences in emotion: Expression, experience, and physiology. Journal of Personality and Social Psychology, 74(3), 686-703.

Recio, G., Sommer, W., & Schacht, A. (2011). Electrophysiological correlates of perceiving and evaluating static and dynamic facial emotional expressions. Brain Research, 1376, 66-75.

Roark, D. A., Barret, S. E., Spence, M. J., Abdi, H., & O'Toole, A. J. (2003). Psychological and neural perspectives on the role of motion in face recognition. Behavioral and Cognitive Neuroscience Reviews, 2(1), 15-46.

Sato, W., Fujimura, T., & Suzuki, N. (2008). Enhanced facial EMG activity in response to dynamic facial expressions. International Journal of Psychophysiology, 70(1), 70-74.

Thayer, J. F., & Johnsen, B. H. (2000). Sex differences in judgement of facial affect: A multivariate analysis of recognition errors. Scandinavian Journal of Psychology, 41, 243-246.

Torro-Alves, N. (2013). Reconhecimento de expressões faciais estáticas e dinâmicas: um estudo de revisão [Recognition of static and dynamic facial expressions: A review study]. Estudos de Psicologia (in press).

Tottenham, N., Tanaka, J., Leon, A. C., McCarry, T., Nurse, M., Hare, T. A., et al. (2009). The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research, 168(3), 242-249.

Trautmann, S. A., Fehr, T., & Herrmann, M. (2009). Emotions in motion: Dynamic compared to static facial expressions of disgust and happiness reveal more widespread emotion-specific activations. Brain Research, 284, 100-115.

Yoshikawa, S., & Sato, W. (2006). Enhanced perceptual, emotional, and motor processing in response to dynamic facial expressions of emotion. Japanese Psychological Research, 48(3), 213-222.